In a tier list of the most exciting PC components, GPUs are easily S-tier. Whether you're a tech enthusiast or just the average gamer, it's hard not to get swept up in the hype of a brand-new release (well, maybe not anymore). Most people understand the GPU as the part of a computer that runs games really well, but there's much more to it than that. Here's everything you need to know about GPUs and why they're one of the most distinctive components in a computer.

What a GPU is and how it works

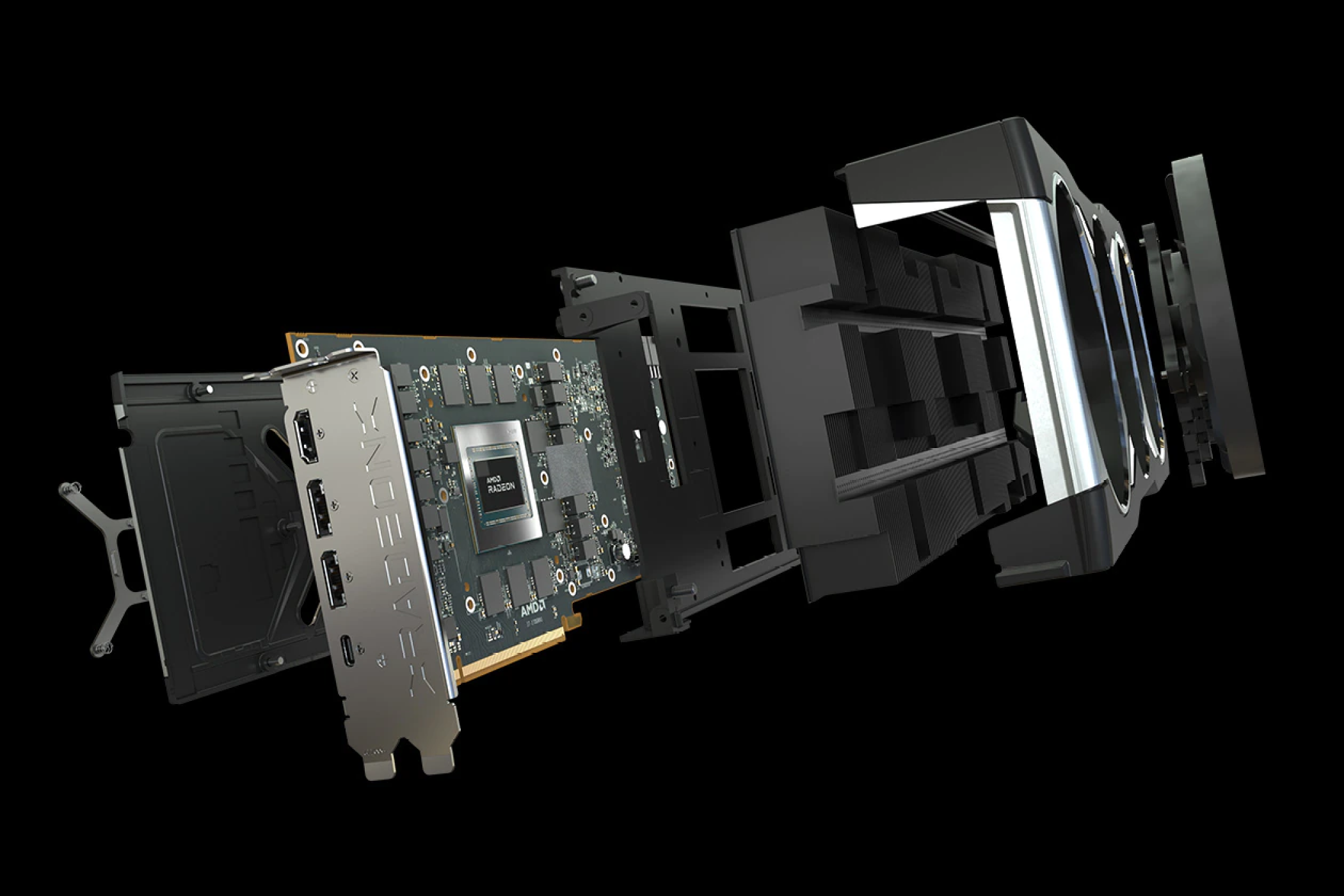

First, let's clear up what exactly a Graphics Processing Unit (GPU) is. Basically, it's a processor that uses lots of individually weak cores, often called shader cores, to render 3D graphics. GPUs come in all sorts of forms: integrated into a CPU (as in Intel's mainstream CPUs and AMD's APUs), soldered onto a motherboard in devices like laptops, or as part of a complete device that plugs into your PC, known as a graphics card. Although we often use the words GPU and graphics card interchangeably, the GPU is just the processor inside the card.

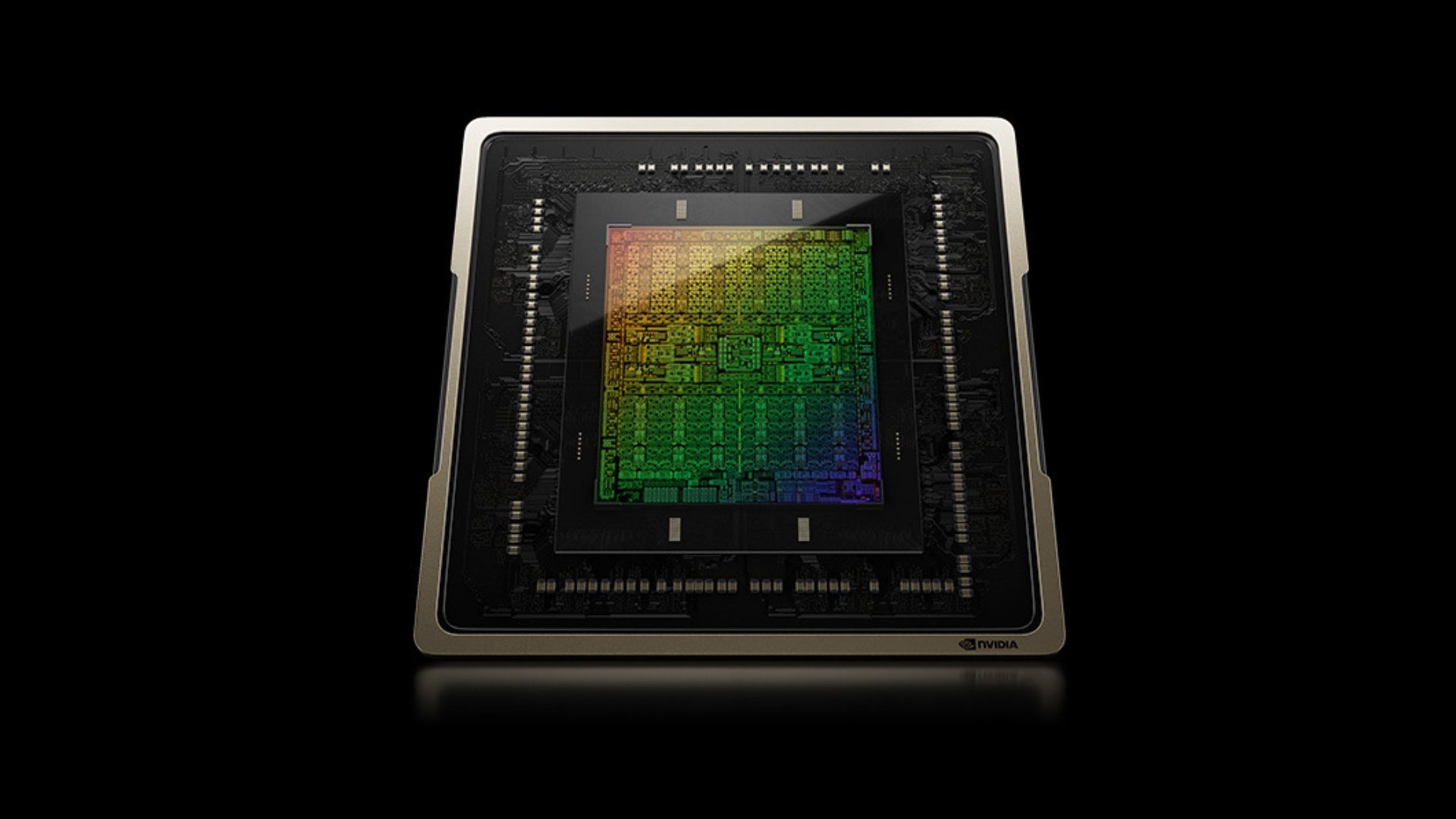

The key distinction between GPUs and CPUs is that GPUs are usually specialized for 3D tasks (playing video games or rendering, for example) and excel at processing workloads in parallel. With parallel processing, many cores work on pieces of the same task at once, something CPUs struggle with. CPUs are optimized for serial, or sequential, processing, which usually means only one or a few cores work on a single task at a time. However, that specialization means GPUs aren't suitable for many things that CPUs can handle.
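To make the parallel-versus-serial distinction concrete, here's a minimal Python sketch. The functions `shade`, `shade_all_parallel`, and `running_balance` are hypothetical illustrations, not real graphics code. Brightening pixels is "parallel-friendly" because each pixel's result doesn't depend on any other pixel, so the work can be chopped into chunks and handed out at once, roughly the way a GPU spreads pixels across shader cores. A running total, written as a simple loop, is a chain where every step needs the previous one. Note that Python threads are used only to show how the work is split up; in standard CPython they won't deliver GPU-style speedups.

```python
from concurrent.futures import ThreadPoolExecutor


def shade(pixel: int) -> int:
    """Hypothetical per-pixel 'shader': brighten by 10%, capped at 255."""
    return min(255, pixel * 11 // 10)


def shade_all_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    # Every pixel is independent, so the list can be split into chunks
    # that separate workers process at the same time (a GPU does this
    # with thousands of cores instead of a handful of threads).
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the chunks.
        results = pool.map(lambda chunk: [shade(p) for p in chunk], chunks)
    return [p for chunk in results for p in chunk]


def running_balance(deposits: list[int]) -> list[int]:
    # Written this way, each step needs the previous balance, so the
    # steps form a chain: serial work of the kind CPUs are built for.
    balance = 0
    history = []
    for amount in deposits:
        balance += amount
        history.append(balance)
    return history


print(shade_all_parallel([0, 100, 200, 250]))  # [0, 110, 220, 255]
print(running_balance([5, 10, -3]))            # [5, 15, 12]
```

The point of the sketch is the shape of the work, not the speed: the first function could hand each chunk to a different core without any coordination, while the second, as written, has to wait on itself at every step.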

The GPU is most famous for being the backbone of the graphics card, a device that's practically a computer unto itself: it comes with its own processor, a circuit board that acts like a motherboard, dedicated memory called VRAM, and a cooler. Not only that, but graphics cards can easily plug into PCs via a single PCIe slot, or even connect through a Thunderbolt port (which uses the USB Type-C connector) inside an external enclosure, which lets them work with laptops too. There's really no other component like the graphics card in terms of performance potential and ease of upgrading.

What companies make GPUs?

The origin of the GPU is tied up in the history of video games, which have been the traditional use case for GPUs. It's hard to say when the first GPU was created, but the term was apparently first coined by Sony for its original PlayStation console in 1994, which used a Toshiba chip. Five years later, Nvidia claimed its GeForce 256 was the first-ever GPU on the grounds that it could perform the transform and lighting tasks previously done on the CPU. Arguably, Nvidia didn't create the first GPU, and the GeForce 256 wasn't even the first graphics card for desktops.

Because the earliest graphics processors were simple, tons of companies made their own GPUs for consoles, desktops, and other computers. But as GPUs evolved and became harder to design and build, companies began dropping out of the market. You no longer saw generic electronics companies like Toshiba and Sony making their own GPUs, and many companies that made nothing but GPUs, like 3dfx Interactive, went bust too. By the early 2000s, the wheat had been separated from the chaff, and only two major players were left in graphics: Nvidia and ATI, the latter of which AMD acquired in 2006.

For over two decades, Nvidia and ATI/AMD were the only companies capable of making high-performance graphics for consoles and PCs. The integrated graphics scene, by contrast, has been more diverse, both because there are strong incentives to make integrated GPUs (like not having to pay Nvidia or AMD to use theirs) and because they're comparatively easy to make. However, Intel entered the high-performance GPU scene in 2022, turning the duopoly into a triopoly.

What are GPUs used for?

Gaming has always been the principal use for graphics cards, but that has become less true in recent years as demand for broader computing power keeps growing. As it turns out, the cores in GPUs are useful for more than just graphics, giving rise to chips optimized for data centers and artificial intelligence, known as general-purpose GPUs (GPGPUs). Demand has gotten so high that Nvidia's data center GPUs now make more money for the company than its gaming ones. AMD and Intel also seek to cash in on the demand for data center and AI GPUs, and other companies may eventually enter the race as this new market materializes.

For AI workloads, GPUs have become incredibly important for tasks like AI image generation and training large language models (LLMs). That's because their ability to process in parallel is a natural fit for the large matrix operations that are extremely common in AI.
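Why do matrix operations suit GPUs so well? In a matrix multiplication, every cell of the result is its own dot product (a row of one matrix times a column of the other), and no cell depends on any other cell. The minimal Python sketch below, using hypothetical helpers `dot_cell` and `matmul_any_order`, demonstrates this by computing the cells in a shuffled order and still getting the same answer. A GPU exploits the same independence by assigning cells to many cores at once; this sketch runs on one CPU core and only illustrates the idea.

```python
import random


def dot_cell(a: list[list[int]], b: list[list[int]], i: int, j: int) -> int:
    # One output cell: row i of a multiplied element-wise with column j of b.
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_any_order(a: list[list[int]], b: list[list[int]], seed: int = 0) -> list[list[int]]:
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    # Shuffle the work order: because no cell reads another cell's result,
    # the order (or, on a GPU, simultaneity) doesn't change the answer.
    random.Random(seed).shuffle(cells)
    out = [[0] * cols for _ in range(rows)]
    for i, j in cells:
        out[i][j] = dot_cell(a, b, i, j)
    return out


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_any_order(a, b))  # [[19, 22], [43, 50]]
```

Real AI models multiply matrices with thousands of rows and columns, which means millions of these independent dot products per operation. That's exactly the kind of workload a chip with thousands of cores can chew through.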

Nvidia vs. AMD vs. Intel: which is best for gaming?

Ever since desktop graphics cards for gaming first became popular in the '90s, one question has been asked consistently: Which brand is the best? Until recently, people only asked whether Nvidia was better than AMD or vice versa, but things are a little more complicated now that Intel has entered the fray. No brand is completely better than the others; each has its own strengths and weaknesses.

Nvidia has always positioned itself as the option for those who want the best gaming GPU with the most performance and the most enticing features. While Nvidia's graphics cards are usually good, they are also often expensive, at least relative to those from AMD and now Intel. Additionally, while Nvidia's exclusive features are cool on paper, the actual experience might not live up to the hype. For example, Deep Learning Super Sampling (DLSS) is a really nice feature for Nvidia cards, but it isn't supported in many games and has quite a few drawbacks.

AMD, by contrast, hasn't always competed with Nvidia at the high end. It has historically focused on offering better value, which often leaves Nvidia's top-end cards unchallenged. On the other hand, Nvidia often gives AMD free rein over the budget GPU market, and that's especially true today. AMD also usually lags behind Nvidia when it comes to features, but it has a decent track record of catching up and sometimes even surpassing its competition.

Intel has only been in the gaming GPU market for about a year at the time of writing, so it hasn't really established itself yet, but so far it has competed on value, as AMD usually does. Intel's biggest struggle has been its drivers, the software that plays a crucial role in how well a GPU performs in games. Since its first-generation Arc Alchemist GPUs launched in 2022, Intel has been hard at work optimizing its drivers, and today the Arc A750 is a popular choice for low-end and midrange PCs.

It's pretty clear where each company excels. Nvidia is the top choice for those who have a big budget and want both great performance and cutting-edge features. AMD is the brand for a broader audience, and although AMD's top-end cards usually don't quite match Nvidia's in performance and features, it's also true that Nvidia hasn't made a sub-$300 card since 2019. Intel, at the moment, offers a good midrange GPU, but that's about it, though upcoming cards might change things.

In general, the GPU market is set for change over the next few years, with Nvidia moving even further into the high-end segment and Intel working to establish itself. We'll have to see how it all shapes up.